Battleships Server / Client for Education

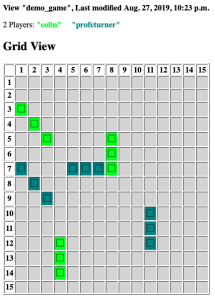

I've been teaching a first year introductory module in Python programming for Engineering at Ulster University for a few years now. As part of the later labs I have let the students build a battleships game using Object Oriented Programming - with "Fleet" objects containing a list of "Ships" and so on where they could […]

Python script to randomise an m3u playlist

While I'm blogging scripts for playlist manipulation here is one I use in a nightly cron job to shuffle our playlists so that various devices playing from them have some daily variety. All disclaimers apply, it's rough and ready but WorksForMe (TM). I have an entry in my crontab like this 0 4 * * […]

Python script to add a file to a playlist

I have a number of playlists on Gondolin, which is a headless machine. I wanted to be able to easily add a given mp3 file to the playlists which are in m3u format. That means that each entry has both the filename and an extended line with some basic metadata, in particular the track length […]

Migration from Savane to Redmine

I am admin for a server at work foss.ulster.ac.uk to host our open source development work. It used to run on GNU Savane, but despite several efforts, that project is clearly dead in the ditch. So having to change the underlying system, I decided to move to Redmine (you can see some previous discussion here). […]

Garbage collecting sessions in PHP

In PHP, sessions are by default stored in a file in a directory. Sessions can be specifically destroyed from within the code, for example when users logout explicitly, but frequently they do not. As a result session files tend to hang around, and cause the problem of how to clean them up. The standard way […]

My first Munin plugin

Munin is a great, really useful project for monitoring all sorts of things on servers over short and long term periods, and can help identify and even warn of undue server loads. It is also appropriately and poetically named for one of Odinn's crows (so I suppose I should have written this on a Wednesday). […]

Geany and other Development Tools

I've tried lots of programming editors and ides over the years, obviously in Unix and Linux this is a Holy War, particularly between the advocates of vi and emacs. It is common for both groups to suggest that the other editor is hopelessly over-complex or clumsy. I think there's some truth in that, because essentially, […]

Drupal Login Problems

So, in order to post that rant about PHP and SimpleXML I had to fix a problem that seems to have spontaneously arisen with Drupal (this content management system). For some reason it wasn't persisting login information, at least from firefox (sorry - iceweasel here on my Debian system). It's interesting to note, reading about […]

SimpleXML should be called BloodyAckwardXML

Another night of coding in PHP, and I've officially decided that SimpleXML utterly irritates me. I'd already discovered, much to my irritation, that is virtually impossible to handle SimpleXML objects elegantly with the Smarty template engine - but now I discover I can't even shove them in a PHP session without trouble - when you […]