Software Security, Open Source, and the xz affair

Recently, the Free and Open Source Software (FOSS) community, and especially the Linux ecosystem within it, has been shocked by a malicious backdoor inserted into the xz compression library, apparently with the goal of compromising SSH (Secure Shell) connections. You can read about the details in articles from the Register and Ars Technica, and in a great curated and explained timeline here. The backdoor had made it into the experimental / unstable / testing branches of some Linux distributions but, happily, not into most stable releases. This has raised a lot of debate about the inherent security, or lack of it, of Open Source Software.

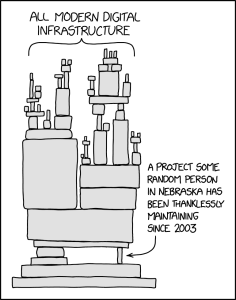

I have seen a number of developers making alternative versions of the famous XKCD cartoon 2347, which illustrates the extent to which much modern software now depends on small libraries, often with a single maintainer. That these libraries are often Free and Open Source can be inferred from context. The comic was published in August 2020 and itself likely referred to earlier breaches. This shows that this isn't a new problem, and indeed, it's a bit of a nod to the extent to which Open Source Software has won the argument and is now ubiquitous in the foundations of many modern software stacks.

What *do* people claim about FOSS security?

The arguments raging online boil down to two questions: is Open Source more secure, and do people claim that it is? Lots of developers are weighing in to say that no such claims are made. But that isn't really true; plenty of claims have been made on both sides of the argument.

Many years ago, after a government web server breach, I heard a colleague being interviewed on the radio opine that such breaches were the predictable outcome of Open Source web servers being intrinsically less secure, because attackers could analyse the source. Unfortunately for my colleague (and the listening public), the compromised web servers weren't running Open Source Software at all, but the closed source Microsoft IIS. Yet the casual guess went unchallenged, and was probably believed by most listeners.

On the other side of the argument, we have the Free Software Foundation, who have often argued that Open Source software is potentially more secure. The argument runs that Open Source Software must be secure by design, precisely because the code can be viewed, and therefore its developers cannot rely on secrecy and obfuscation - or at least secrets must be handled carefully and separately from the code. By contrast, proprietary or closed source software can, at its worst, rely on security through obscurity - counting on the code being unavailable to the public to prevent attacks.

Another contribution to the debate is Linus's Law.

"given enough eyeballs, all bugs are shallow"

Eric S Raymond - The Cathedral and the Bazaar (1999)

The notion here is that because many people have access to the source code, bugs are likely to be found, and that includes security bugs and - in theory - deliberate backdoors. There is certainly a lot of evidence for this, given how promptly many bugs are found in the FOSS ecosystem. But still - some bugs have lain undiscovered for many years, and the fact that many eyes can, in theory, see the source code doesn't ensure that they will.

Comparisons with the data that isn't there

I confess I have found the xz saga really shocking. Over the years I have seen many security flaws in FOSS, some incredibly serious, discovered as the result of accidental mistakes in the code. This, however, was clearly a malicious act, one that exploited not just the FOSS ecosystem but the very weakness the XKCD cartoon focuses on: that this work is often thankless and falls to a single individual. The social mechanisms by which the bad actor gained trust and a place in the maintainer role are really troubling, as is the stress experienced by the original maintainer.

But - as in my recent blog article about the phenomenon of survivor bias (albeit that was in the education space) - we have to pay attention to the data that is not available. We can see all of this "mess" precisely because the FOSS paradigm is open.

"We will not hide problems."

Point 3 of the Debian Social Contract.

The Debian Social Contract quoted above also contained the Debian Free Software Guidelines, which became the foundation of the Open Source Definition. Debian certainly isn't the only Open Source project in town, but it is an influential one, and few Open Source projects would disagree with these principles.

In other words, we have spectacular news stories about Open Source security breaches precisely because the problems are visible to us. By contrast, we often get no insight into security issues in closed source products: whether and how they were identified, or whether, how and when they were patched. It isn't hard to imagine a similarly serious problem being found in a closed source product and being patched at a more leisurely pace, because stakeholders can't see either the issue or the resolution.

It would also be unwise to assume that no hostile actors seek to gain access to, and involvement in, prominent closed source products.

The way ahead

We can't and shouldn't be naive about the threats to the Open Source space.

Free Software means Free as in Freedom, not Free of Charge - we need to have a serious conversation as an industry about the flow of money from projects that depend on a software library to those maintaining the library itself. This doesn't happen routinely, and we need to wake up to the reality that this alone creates an attack vector against under-resourced and overwhelmed maintainers. Software in the Public Interest does great work for large projects, but these smaller dependencies are largely a gap in the model.

Audit has weaknesses - in theory, Open Source projects demand clear code, and "deliberately obfuscated source code is not allowed". As a result, not only the library maintainer but also the package maintainers for various Linux distributions (or similar) may spot attacks. However, this particular attack used a very subtle form of obfuscation relying on "test" files within the source code archives. While we can expect such files to be scrutinised more closely in future, in practice opaque data often exists legitimately within projects.

This attack was stopped. While it makes for somewhat technical reading for people outside the FOSS developer space, the email by Andres Freund that uncovered the attack is a carefully considered, thorough examination by someone who makes it clear they are not a security researcher. But with access to the tools and the code, they were able to piece together the evidence of the attack and how it progressed.

In summary - closed source ecosystems are probably not the solution here, at least not for components that many other developers rely upon. Yes, we don't yet know whether there are similar backdoors and hacks in the Open Source ecosystem, but we can look for them and potentially discover them. We cannot know whether a similar socially engineered attack on a closed source ecosystem would have succeeded, or has already succeeded, and we wouldn't be able to track it down in the same way. Even for those developing proprietary closed source software, being able to inspect your dependencies is likely to be important.

The solution seems at once simpler and more difficult: we have to properly resource the development of the components we rely upon. This is the conversation we should be having as an industry.