Budgeting for Assessment

Workloads for academics in Higher Education are often very complex: teaching, research and administration all compete for our attention, and frequent task switching adds to the complexity. For many academics, teaching is a significant part of their work, but explicitly looking at the time spent on assessment could bring better […]

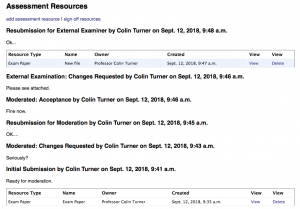

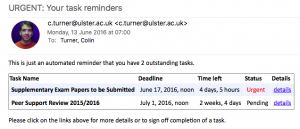

Assessment handling and Assessment Workflow in WAM

Some time ago I began writing a Workload Allocation Modeller aimed at Higher Education, and I have written some previous blog articles about it. As is often the way, the scope of the project broadened, and I found myself adding support for handling assessments and the quality assurance (QA) processes around them. At some point this necessitates a […]

Workload Allocation Modelling Update - Scalability

I have been doing more work on my software for Academic Workload Modelling and developing a roadmap for two future versions: one containing the modifications needed to run real allocations for next year without scrapping existing data, and another adding code to handle the moderation of exams and coursework (which isn't really anything to do […]